Data Science capstone projects batch #24

by Ekaterina Butyugina

We would like to take a moment to give a big shout-out to all the students who joined us in November and fully committed themselves to excelling in both the course and their capstone projects.

In just three short months, the incredible data science enthusiasts from Batch #24 in Zurich, as well as the accomplished fourth cohort from Munich, admirably tackled a diverse array of challenging projects. Their outstanding skills and unwavering dedication were prominently on display. This time, HP played a significant role in the students' success by providing us with exclusive Z by HP workstations.

We encourage you to witness firsthand the transformative power of data science as these individuals push boundaries, uncover insights, and drive meaningful impact.

Cluster Constructor: Image and text-based clustering for industrial machine parts

Students: Maria Salfer, Naveen Chand Dugar, Pedro Iglesias

Syrus assists industrial machinery manufacturers in enhancing their financial performance through value-based pricing. They maintain an extensive database of spare parts across various industries, where a crucial step involves grouping similar parts. Currently, this process is conducted manually, and Syrus is seeking automation solutions.

To automate the grouping of similar spare parts from a large database, Ria, Naveen, and Pedro obtained a dataset that included item names in both German and English, along with images for approximately 55% of the parts. The images had varying backgrounds and sizes and could include unwanted elements such as rulers or measuring tapes. For an example, see the image below:

Figure 1. Data Sample

The workflow of the project had the following steps:

1. Data preprocessing:

   - Remove items with missing names.
   - Fill in missing names using corresponding names in the other language or manual intervention.
   - Apply segmentation (LangSAM) to remove unwanted elements from images.

2. Feature extraction:

   - Text data: Used various Natural Language Processing techniques like Bag-of-Words, TF-IDF, and sentence transformers to extract information from item names.
   - Image data: Used Convolutional Neural Networks (ResNet50, VGG16) for feature extraction, also with fine-tuning on the given images.

3. Clustering:

   - Clustered parts based on:
     - Text features only
     - Image features only
     - Combined features (text and image)
   - Used k-means as well as a density-based clustering algorithm, DBSCAN, and removed noise-labeled data points for optimal results.
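The combined-feature clustering step can be illustrated with a minimal sketch. It is not the team's code: the part names and the two-dimensional text and image vectors are made up, the `eps` and `min_samples` values are arbitrary, and the tiny pure-Python `dbscan` function stands in for a library implementation so the example has no dependencies. Each modality is normalized before concatenation so that neither dominates the distance, which is one plausible way to combine features, not necessarily the one used in the project.

```python
import math

def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def combine_features(text_vec, image_vec):
    # Normalize each modality separately, then concatenate.
    return l2_normalize(text_vec) + l2_normalize(image_vec)

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dbscan(points, eps, min_samples):
    """Return one cluster label per point; -1 marks noise."""
    labels = [None] * len(points)  # None = not visited yet

    def neighbors(i):
        return [j for j in range(len(points))
                if euclidean(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise, may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point joins the cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is a core point: keep expanding
    return labels

# Hypothetical (text_vector, image_vector) pairs for five parts.
parts = {
    "hex bolt M8": ([1.0, 0.0], [0.9, 0.1]),
    "hex bolt M10": ([0.9, 0.1], [1.0, 0.0]),
    "o-ring seal": ([0.0, 1.0], [0.1, 0.9]),
    "o-ring gasket": ([0.1, 0.9], [0.0, 1.0]),
    "unlabelled bracket": ([0.7, 0.7], [0.7, -0.7]),
}
features = [combine_features(text, image) for text, image in parts.values()]
labels = dbscan(features, eps=0.3, min_samples=2)

# Drop noise-labeled points, as described in the clustering step.
grouped = {name: label for name, label in zip(parts, labels) if label != -1}
print(grouped)
```

In this toy data the two bolts and the two o-rings form one group each, while the bracket is labeled as noise and left out.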

As a result, the team achieved over 70% overall performance, a significant improvement on Syrus' current manual process. Three findings stood out: combining text and image features yielded the best results; fine-tuning the models before clustering further enhanced performance; and performance may vary depending on how similar the parts are to the training data.

Figure 2. Results

Looking ahead, Ria, Naveen, and Pedro aim to integrate their solution seamlessly into Syrus' existing workflow. Additionally, they plan to delve deeper into understanding how the number of images per group affects the clustering results.

This project has successfully automated the process of grouping spare parts for Syrus, significantly enhancing both efficiency and accuracy. Although challenges in integration and potential variations in future performance exist, the project showcases the substantial potential of leveraging both text and image data for effective part clustering.

Algorithmic Synthetic Data Generation: Exploring Fidelity, Privacy, and Scalability

Students: Dàniel Kàroly, Justin Villard, Thomas Vong

Dive into the cutting-edge realm of synthetic data as we embark on a journey to benchmark the capabilities of various data-generating algorithms. Quality synthetic data is pivotal, mirroring the complex patterns of genuine datasets while upholding stringent privacy standards. But what exactly makes synthetic data good, and what are the challenges associated with it?

Figure 1. Challenges

The evaluation of synthetic data generation focuses on three critical aspects. The first is fidelity: does the synthetic data accurately reflect the real data, preserving essential characteristics such as distributions and correlations? The second aspect is scalability: can the data generation process be scaled up efficiently and securely? The third and equally important aspect is privacy: does the synthetic data creation process safeguard against potential data leaks?

The team's objective was to conduct a benchmark analysis of Syntheticus's proprietary algorithm in comparison with other leading synthesis platforms, namely YData (GMM) and SDV (HMA). This evaluation was carried out in the context of multi-table synthesis, a particularly challenging scenario. They utilized a publicly available financial dataset comprising 8 tables (22,000 rows), characterized by complex inter-table relationships and a mix of categorical and numerical attributes.

Their methodology involved an in-depth examination of various combinations of the 8 tables, applying the Syntheticus, YData, and SDV algorithms to each combination to generate synthetic data. Through systematic experimentation, the team aimed to thoroughly assess the fidelity, scalability, and privacy performance of each algorithm, providing a comprehensive evaluation of their capabilities.
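To make the fidelity and privacy questions more concrete, here is a minimal sketch of two simple checks. The post does not say which metrics the team used, so both are assumptions chosen for illustration: a two-sample Kolmogorov-Smirnov statistic for comparing the distribution of one numerical column, and the share of synthetic rows that are verbatim copies of real rows as a crude leak indicator. All values and function names are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(real, synthetic):
    """Largest gap between the two empirical CDFs (0 = identical, 1 = disjoint)."""
    real_sorted, synth_sorted = sorted(real), sorted(synthetic)
    max_gap = 0.0
    for value in sorted(set(real) | set(synthetic)):
        cdf_real = bisect_right(real_sorted, value) / len(real_sorted)
        cdf_synth = bisect_right(synth_sorted, value) / len(synth_sorted)
        max_gap = max(max_gap, abs(cdf_real - cdf_synth))
    return max_gap

def exact_copy_rate(real_rows, synthetic_rows):
    """Fraction of synthetic rows that also appear verbatim in the real data."""
    real_set = set(real_rows)
    return sum(row in real_set for row in synthetic_rows) / len(synthetic_rows)

# Hypothetical transaction amounts from a real table and two synthetic versions.
real_amounts = [100, 200, 300, 400]
close_synthetic = [110, 190, 310, 390]
poor_synthetic = [900, 950, 1000, 1100]

print(ks_statistic(real_amounts, close_synthetic))  # small gap: similar distribution
print(ks_statistic(real_amounts, poor_synthetic))   # large gap: poor fidelity

# Hypothetical rows as tuples; one synthetic row copies a real record.
real_rows = [(1, "a"), (2, "b")]
synthetic_rows = [(1, "a"), (3, "c")]
print(exact_copy_rate(real_rows, synthetic_rows))
```

A real benchmark would cover every column, the correlations between columns, and the relationships between tables, but the idea is the same: lower distribution gaps indicate higher fidelity, and copied records point to a privacy risk.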

Figure 2. Results

Customer thoughts: from reviews to recommendations for Nestlé Coffee Machine

Students: Elina Vigand, Danielle Lott, Alexandra Ciobîcă, Vahid Mamduhi

Nestlé, a global leader renowned for its high-quality food and beverages, recently introduced a groundbreaking coffee machine designed to revolutionize the brewing experience. However, gauging customer reactions to this innovation proved to be a complex challenge. The traditional method of manually analyzing a mere 20 reviews at a time led to slow processing times, limited data capture, and superficial insights.

To address this, a project was undertaken to develop an automated data analysis pipeline capable of processing hundreds of reviews within minutes, thereby unlocking a deeper understanding of customer opinions. The approach combined web scraping and API integrations to collect reviews from 10 different platforms across two countries, amassing a total of 357 reviews. These were then preprocessed and translated into a common language. Additionally, a "review reliability detector" was developed to sift out illegitimate reviews, which accounted for 17% of the total.

The team, consisting of Elina, Danielle, Alexandra, and Vahid, further refined the dataset by employing two methods for detecting duplicates: a preliminary check based on metadata and a more thorough review for highly similar content, identifying those with 70% or greater similarity. This meticulous process resulted in a clean and reliable dataset of 298 unique reviews.
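The content-based duplicate check can be sketched in a few lines. The post does not specify how similarity was measured, so the use of `difflib.SequenceMatcher` from the Python standard library is an assumption, as are the sample reviews and the helper name `drop_near_duplicates`; only the 70% threshold comes from the description above.

```python
from difflib import SequenceMatcher

def drop_near_duplicates(reviews, threshold=0.70):
    """Keep the first review of every group whose similarity is >= threshold."""
    kept = []  # pairs of (original text, normalized text)
    for text in reviews:
        # Lowercase and collapse whitespace so trivial differences are ignored.
        normalized = " ".join(text.lower().split())
        is_duplicate = any(
            SequenceMatcher(None, normalized, seen).ratio() >= threshold
            for _, seen in kept
        )
        if not is_duplicate:
            kept.append((text, normalized))
    return [original for original, _ in kept]

# Hypothetical reviews: the second is a near-copy of the first.
reviews = [
    "Great coffee, the machine heats up quickly.",
    "Great coffee, the machine heats up quickly!",
    "The capsules are hard to find in stores.",
]
unique_reviews = drop_near_duplicates(reviews)
print(unique_reviews)
```

Comparing every review against every kept review is quadratic, which is fine for a few hundred reviews but would need a smarter approach for much larger collections.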

Leveraging Natural Language Processing (NLP), the team extracted nuanced insights from the collected reviews. Initial sentiment analysis revealed a predominantly positive mood among customers, a promising indicator of the product's reception. The analysis delved deeper, identifying specific pros and cons mentioned in the reviews.

To polish the raw data and distill meaningful themes, the team utilized Large Language Models (LLMs). This sophisticated analysis generated 13 key topics, offering a comprehensive understanding of customer preferences and insights into the product's impact.

For those with a keen interest in the technical details behind this innovative approach, the following subchapter provides a deeper dive into the methodologies and technologies employed by the team. This project not only demonstrates the potential of combining advanced data analysis techniques but also underscores Nestlé's commitment to understanding and meeting customer needs through innovation.

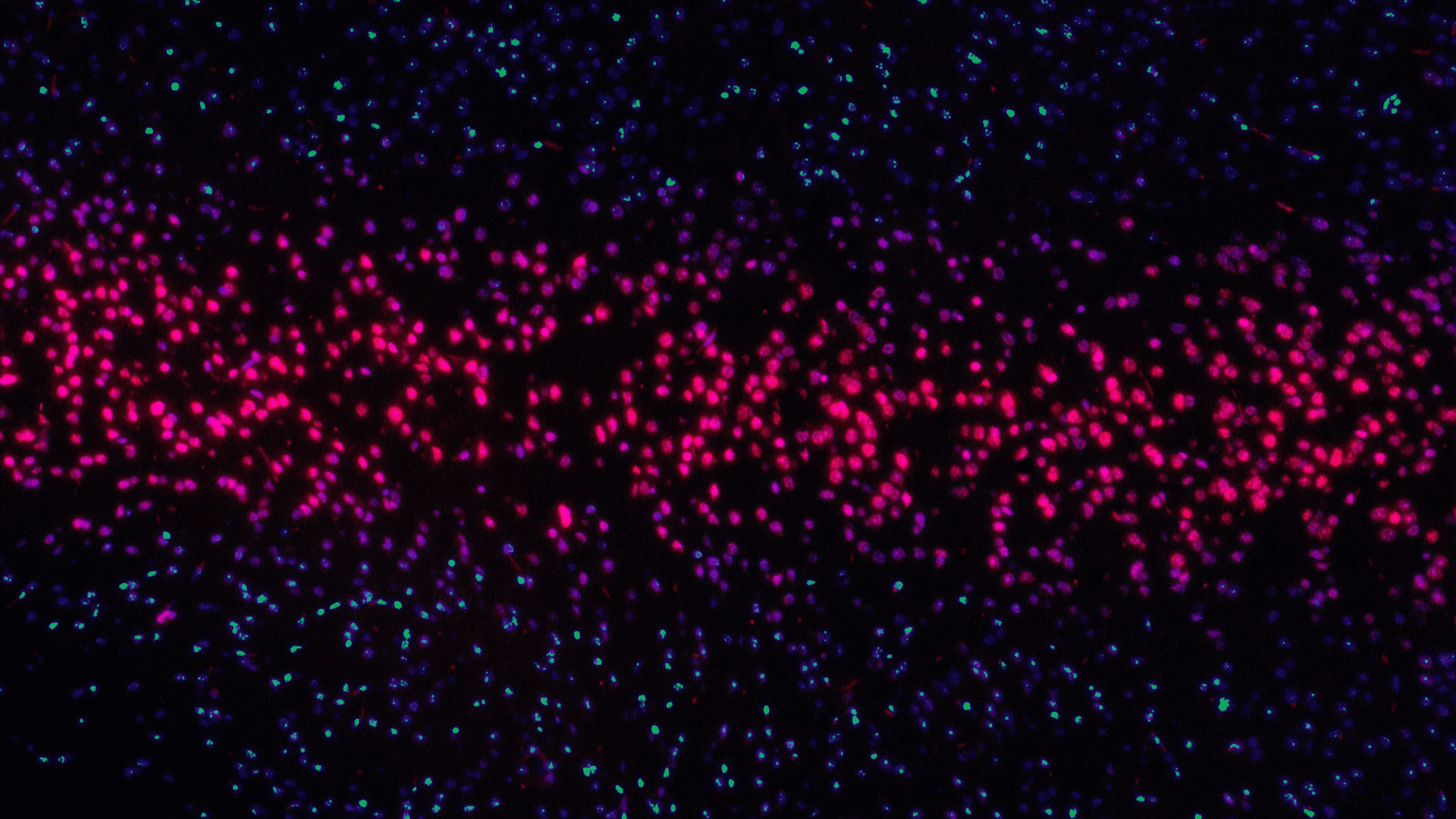

Figure 1: Topic clustering visualization, showing topics with associated documents

The analysis pipeline consists of a mix of the GPT 3.5 model together with BERTopic. BERTopic leverages transformers and c-TF-IDF to generate dense clusters of similar documents, facilitating the interpretation of topics by retaining crucial terms within their descriptions.
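For readers curious about c-TF-IDF, the following is a minimal sketch of the idea rather than BERTopic's actual implementation. It assumes the class-based weighting described in the BERTopic documentation: all documents of a cluster are merged into one long document, and a term's frequency within the cluster is multiplied by log(1 + A / f), where A is the average number of words per cluster and f is the term's frequency across all clusters. The cluster names and reviews are invented.

```python
import math
from collections import Counter

def c_tf_idf(cluster_docs):
    """cluster_docs maps a cluster id to the list of documents assigned to it."""
    # Treat every cluster as one long document and count its terms.
    counts = {
        cluster: Counter(" ".join(docs).lower().split())
        for cluster, docs in cluster_docs.items()
    }
    total_per_term = Counter()
    for term_counts in counts.values():
        total_per_term.update(term_counts)
    avg_words = sum(total_per_term.values()) / len(counts)

    scores = {}
    for cluster, term_counts in counts.items():
        n_words = sum(term_counts.values())
        scores[cluster] = {
            term: (freq / n_words) * math.log(1 + avg_words / total_per_term[term])
            for term, freq in term_counts.items()
        }
    return scores

def top_terms(term_scores, k=1):
    return sorted(term_scores, key=term_scores.get, reverse=True)[:k]

# Hypothetical clusters of (already cleaned) review snippets.
clusters = {
    "taste": ["coffee tastes rich", "rich crema and aroma"],
    "capsules": ["compostable capsules", "capsules are pricey"],
}
scores = c_tf_idf(clusters)
print(top_terms(scores["taste"]), top_terms(scores["capsules"]))
```

The highest-scoring terms per cluster are the "crucial terms" that make a topic readable; in this toy example they are "rich" and "capsules".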

Analysis blocks:

- Generating sentiments using the GPT 3.5 model

- Extracting pros and cons using the GPT 3.5 model (GPT-3.5-turbo-1106)

- Creating reproducible topics with the BERTopic algorithm.

- Identifying subtopics using the hierarchical clustering from BERTopic.

- Producing the final names by combining all the above-mentioned techniques.

As a final step, the team created a Streamlit app, where one can explore their findings:

- See where customers are raving and where concerns simmer across different platforms.

- Explore the topics customers care about most, both positive and negative.

- Curious about specific details? Just ask the ChatGPT-powered assistant! Get real-life examples and insights on any topic related to the collected data.

- Add the most recent reviews and see how they contribute to the overall picture.

In conclusion, the team delivered insightful findings to Nestlé on several fronts, including Coffee Quality, Capsules, Machine Experience, Design, and Compostable Pods. Looking ahead, they plan to broaden the dataset, delve into outlier topics and critical areas for enhanced insights, and automate the workflow to make the pipeline more efficient, from data gathering to visualization. Leveraging data and technology, the team has empowered Nestlé to deeply connect with its customer base, grasp their core needs, and create a product that resonates with coffee enthusiasts around the globe.

BestSecret’s Best Secret - Take a Look Behind the Scenes of an Online Shop

Students: Anurag Chowdhury, Faezeh Nejati Hatamian, Tschimegma Bataa

According to current e-commerce statistics, 93% of consumers consider visual appearance to be the key deciding factor in a purchasing decision, highlighting that customer experience is crucial for BestSecret's success. BestSecret is a members-only online shopping community offering premium and luxury brands at exclusive prices.

The project focused on classifying all product images according to their type. The team also aimed to provide insights into which parts of an image influence the classification model's decision, improving the accuracy and insight of image-based recommendations. BestSecret provided product images across 4 different categories: Clothes, Shoes, Underwear, and Bags. Each category is segmented into five classes. For example, the clothing category includes model look, front and back views, ghost, and zoomed details.

Figure 1: Data

First, Anurag, Faezeh, and Tschimegma cleaned the data and pre-processed different datasets for the model training. The team trained several models, including a CNN baseline, ResNet50, EfficientNetB0, and InceptionNet-V3. Among these, ResNet50 delivered the best results.

Figure 2: Results

Subsequently, a Streamlit app was created, which allows exploration of the model's performance, presenting metrics like accuracy, classification reports, confusion matrices, and the count of misclassified images for each category.

To address the issue of misclassifications, the team implemented Grad-CAM for the incorrectly classified images. This tool is invaluable as it clarifies which areas of an image a convolutional neural network (CNN) focuses on when making a prediction.
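The core Grad-CAM computation is short enough to sketch without a deep learning framework. The example below is an illustration, not the team's implementation: it assumes the feature maps of the last convolutional layer and the gradients of the predicted class score with respect to those maps have already been extracted from the network, and it uses tiny made-up 2 x 2 maps. Each channel is weighted by the spatial average of its gradient, the weighted maps are summed, and negative values are clipped by a ReLU.

```python
def grad_cam(activations, gradients):
    """Both arguments are lists of K feature maps, each an H x W nested list."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = spatial average of the class-score gradient.
    weights = [
        sum(sum(row) for row in grad) / (height * width) for grad in gradients
    ]
    # Weighted sum of the feature maps, followed by ReLU.
    heatmap = [
        [
            max(0.0, sum(w * fmap[i][j] for w, fmap in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    # Scale to [0, 1] so the map can be overlaid on the image.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap

# Hypothetical values: channel 0 supports the predicted class, channel 1 opposes it.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]
heatmap = grad_cam(activations, gradients)
print(heatmap)
```

In practice the resulting map is upsampled to the input resolution and drawn over the misclassified image, which shows whether the network looked at the product or at the background.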

In summary, the move from manual to automated classification has markedly improved accuracy, from around 90% to up to 97%, while also helping to minimize manual effort. The system will be refined further through the integration of end-to-end labeling functionality.

Looking ahead, the next step involves employing generative AI to create images of other views from just a front-look image of an article, opening the door to more advanced and streamlined image processing capabilities.

AlpineSync: Elevating the ski experience with data science and machine learning

Students: Oluwatosin Aderanti, Paul Biesold, Raphael Penayo Schwarz, Sebastian Rozo

As skiers navigate the slopes of the world's ski resorts, their experiences have evolved into data-centric adventures. They are seeking a deeper understanding of their performance by utilizing technology to access detailed analytics and performance metrics. This implies monitoring progress, evaluating achievements, and capturing key moments.

The company Alturos Destinations specializes in crafting and implementing state-of-the-art digitalization strategies that redefine the landscape of tourism. The Skiline App revolutionizes the skiing experience by capturing and analyzing skiers' data to provide personalized statistics and memories of their mountain adventures.

- The business challenge: to provide an alternative to existing manual lift ticket scanning, particularly in instances where scanning machines are unavailable.

- The idea behind: utilize sensor data from skiers' mobile devices to automatically track ski-lift usage within the Alturos Skiline mobile app. This can serve as an additional way to collect data when ticket scanners are out of service, and could potentially replace them altogether. The machine-learning algorithms will enable real-time data analysis on mobile devices and distinguish between a skier's use of different ski lifts.

- The approach: harness sensor data from mobile devices to accurately identify ski lift usage.

- Incoming data: Tosin, Raphael, Paul, and Sebastian utilized sensor data from two popular mobile apps: Sensor Logger for iOS devices and Sensors Toolbox for Android devices. This data formed the foundation for the lift detection algorithm.

- Data management: To train their models effectively, the team utilized labeled data for supervised machine learning from 12 different skiing sessions and two locations. Datasets were then prepared by incorporating outlier detection and engineered features to maximize predictive performance.

- Modeling: The team explored various machine learning models, prioritizing accuracy metrics and selecting a Random Forest Classifier as the most suitable model for lift detection.

- Post-processing: The team achieved a 96% accuracy rate with their Random Forest Classifier (RFC) model. By applying post-processing strategies, they enhanced the continuity of classified events and minimized misclassifications. These refinements contribute to a more consistent user experience and improve overall accuracy.

- UX development: Their focus extended beyond algorithmic development to user experience enhancement. It resulted in plotting and mapping ski-lift events, simulating real-time predictions, and generating lift usage statistics.

- The AlpineSync App: The team created an app that serves as a Proof of Concept (POC) platform for visualizing and interacting with their models.
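The post-processing step mentioned above can be illustrated with a simple smoothing filter. The post does not describe the actual strategy, so the sliding-window majority vote below is an assumption, and the label names, window size, and sample predictions are hypothetical. The idea is that a single "lift" prediction in the middle of a ski run, or the reverse, is most likely a misclassification and should follow its neighbors.

```python
from collections import Counter

def smooth_labels(labels, window=3):
    """Replace each prediction with the majority label in a centered window."""
    half = window // 2
    smoothed = []
    for i in range(len(labels)):
        neighborhood = labels[max(0, i - half): i + half + 1]
        # On a tie, most_common returns the label seen first in the window.
        smoothed.append(Counter(neighborhood).most_common(1)[0][0])
    return smoothed

# Hypothetical per-timestep classifier output with a few isolated errors.
raw = ["ski", "ski", "lift", "ski", "ski", "lift", "lift", "ski", "lift", "lift"]
smoothed = smooth_labels(raw)
print(smoothed)
```

After smoothing, the sequence contains one continuous ski segment followed by one continuous lift ride, which is what lift usage statistics need.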

Figure 1: AlpineSync App Screenshot

The future vision: The incorporation of mapping features by leveraging Google Maps APIs and continual refinement of the models remain key objectives. Future initiatives may include:

- Model training and validation using datasets from diverse geographical areas

- Validation of the pipeline with live data input

- Implementation of a clustering algorithm for grouping lift rides based on their origin and destination points

As we conclude this remarkable journey with Data Science Final Projects Group #24, we wish to extend our heartfelt gratitude to all the companies that have provided our students with invaluable projects. Your collaboration has not only enriched their learning experience but also paved the way for innovative solutions to real-world challenges. To the students who joined us in November and dedicated themselves wholeheartedly to completing the course and their final projects, we commend your outstanding efforts. Your dedication, skill, and passion for data science have truly shone through. We wish all the students the very best in their future endeavors. May you continue to push boundaries, innovate, and make a meaningful impact wherever your career may lead you.

For those inspired by these stories and interested in embarking on their own data science journey, we're excited to announce our upcoming bootcamp. Learn more about our data science program and how you can join the next cohort of students at Constructor Academy.